MIT Researchers Aim to Teach Aircraft Carrier Drones to Read Hand Gestures

Right now, efforts are underway to bring the airborne military drones that have been so widely used in overland conflicts onto aircraft carriers. There are a number of enormous challenges to meet first, not the least of which is how these robotic fliers will interact with humans on the decks. Looking to solve that problem, and to improve natural human-machine communication while he’s at it, is Yale Song, a Ph.D. student at the Massachusetts Institute of Technology who has begun teaching a computer to obey the hand signals that aircraft carrier crews use to communicate with pilots.

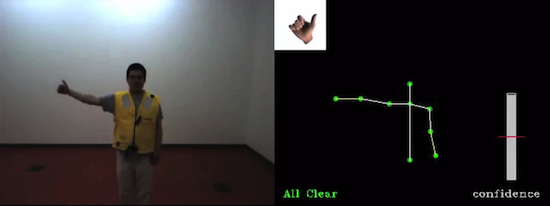

For a computer to recognize a hand gesture, it has to consider two things. The first is the physical positioning of the human body. Fortunately, we’ve become rather adept at determining where an elbow is or whether someone’s hand is open, thanks to projects like the Kinect. The second challenge, determining when a gesture begins and ends, is far more difficult.

Song recognized that aircraft crews are in constant motion, packing several gestures into a complex series of movements. Simply capturing their movements and analyzing them afterward would take too long, so he tried a different method that decodes the gestures as they come. Song’s process looks at 60-frame sequences, or about 3 seconds of video, at a time. A single sequence may not contain a whole gesture, and a new gesture can begin partway through one.
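To make the windowing idea concrete, here is a minimal sketch of cutting a live frame stream into 60-frame windows. The 60-frame length comes from the article; the 20 frames-per-second rate is only inferred from "60 frames, or about 3 seconds," and the 10-frame stride between overlapping windows is a hypothetical choice, not a detail of Song’s system.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List, TypeVar

T = TypeVar("T")

FRAMES_PER_WINDOW = 60  # window length reported in the article
ASSUMED_FPS = 20        # assumption: 60 frames ~ 3 seconds implies ~20 frames per second
ASSUMED_STRIDE = 10     # hypothetical hop between successive windows


def sliding_windows(frames: Iterable[T],
                    size: int = FRAMES_PER_WINDOW,
                    stride: int = ASSUMED_STRIDE) -> Iterator[List[T]]:
    """Yield fixed-length windows from a frame stream as soon as each one is complete.

    Because the stream is consumed incrementally, recognition could start on the
    first window while later frames are still arriving.
    """
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    buffer: Deque[T] = deque(maxlen=size)  # keeps only the most recent `size` frames
    seen = 0
    for frame in frames:
        buffer.append(frame)
        seen += 1
        # Emit the first window at frame `size`, then one every `stride` frames.
        if seen >= size and (seen - size) % stride == 0:
            yield list(buffer)


print(FRAMES_PER_WINDOW / ASSUMED_FPS)  # 3.0 seconds per window
windows = list(sliding_windows(range(200)))  # integers stand in for 200 video frames
print(len(windows), windows[0][0], windows[-1][-1])  # 15 0 199
```

Overlapping windows are one way to cope with a gesture starting partway through a sequence: whatever a given window cuts off, a later window is likely to catch.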

This is where things get clever: Song’s approach calculates the probability that a single sequence belongs to a particular gesture. It then uses those probabilities to calculate a weighted average over successive sequences before deciding which message is most likely being conveyed.
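The sketch below shows the general shape of that averaging step under stated assumptions. The article doesn’t say how the weights are chosen, so this version simply gives the newest window weight 1 and halves the weight for each step back in time; the gesture labels are hypothetical, too.

```python
from typing import Dict, Sequence, Tuple


def decide_gesture(window_probs: Sequence[Dict[str, float]],
                   decay: float = 0.5) -> Tuple[str, Dict[str, float]]:
    """Combine per-window gesture probabilities into a single decision.

    `window_probs` is ordered oldest to newest. The newest window gets weight 1,
    and each step further back multiplies the weight by `decay` (a hypothetical
    weighting scheme, not necessarily the one used at MIT).
    """
    if not window_probs:
        raise ValueError("need at least one window")
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    totals: Dict[str, float] = {}
    weight, weight_sum = 1.0, 0.0
    for probs in reversed(window_probs):  # newest window first
        for gesture, p in probs.items():
            totals[gesture] = totals.get(gesture, 0.0) + weight * p
        weight_sum += weight
        weight *= decay
    averaged = {g: s / weight_sum for g, s in totals.items()}
    best = max(averaged, key=averaged.get)
    return best, averaged


# Hypothetical labels: the newest window alone would narrowly favor "move_ahead".
history = [
    {"stop": 0.90, "move_ahead": 0.10},
    {"stop": 0.80, "move_ahead": 0.20},
    {"stop": 0.45, "move_ahead": 0.55},
]
label, averaged = decide_gesture(history)
print(label)  # stop
```

The point of averaging is visible in the example: one ambiguous window doesn’t flip the decision when the preceding windows agree.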

Using a library of 24 gestures recorded by 20 people, Song’s system correctly identified the gestures 76% of the time. That’s pretty impressive, though the system still struggles with fast or erratic gestures. It’s also worth noting that because the system relies on a limited vocabulary of gestures, it can’t comprehend a novel series of gestures, no matter how simple or logical they would appear to a human being.

Though 76% accuracy is great in the lab, it’s still not high enough when human lives and enormously expensive pieces of military equipment are at stake. Song’s primary goal at this point is to improve the system’s accuracy and streamline the computational processes involved. From MIT News:

Part of the difficulty in training the classification algorithm is that it has to consider so many possibilities for every pose it’s presented with: For every arm position there are four possible hand positions, and for every hand position there are six possible arm positions. In ongoing work, the researchers are retooling the algorithm so that it considers arm position and hand position separately, which drastically cuts down on the computational complexity of its task. As a consequence, it should learn to identify gestures from the training data much more efficiently.
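A rough way to see why separating arm and hand helps: six arm positions times four hand positions gives 24 combinations to weigh for every pose, while judging each part on its own means weighing only 6 + 4 = 10 options. The sketch below assumes a pose’s score is simply the sum of an independent arm score and hand score (as with log-probabilities of independent parts). Under that assumption both approaches pick the same pose. The labels and scores are made up, and this illustrates only the size of the search, not the MIT team’s actual retooled algorithm.

```python
from itertools import product
from typing import Dict, Tuple

Pose = Tuple[str, str]


def joint_argmax(arm_scores: Dict[str, float],
                 hand_scores: Dict[str, float]) -> Tuple[Pose, int]:
    """Score every (arm, hand) combination together; also return how many were scored."""
    candidates = list(product(arm_scores, hand_scores))
    best = max(candidates, key=lambda pose: arm_scores[pose[0]] + hand_scores[pose[1]])
    return best, len(candidates)


def factored_argmax(arm_scores: Dict[str, float],
                    hand_scores: Dict[str, float]) -> Tuple[Pose, int]:
    """Pick the best arm and the best hand separately; also return how many were scored."""
    best_arm = max(arm_scores, key=arm_scores.get)
    best_hand = max(hand_scores, key=hand_scores.get)
    return (best_arm, best_hand), len(arm_scores) + len(hand_scores)


# Six arm positions and four hand positions, as in the quote; labels and scores are hypothetical.
arm_scores = {f"arm_{i}": float(-abs(i - 2)) for i in range(6)}
hand_scores = {f"hand_{j}": float(-abs(j - 3)) for j in range(4)}

print(joint_argmax(arm_scores, hand_scores))     # (('arm_2', 'hand_3'), 24)
print(factored_argmax(arm_scores, hand_scores))  # (('arm_2', 'hand_3'), 10)
```

If arm and hand positions interact in ways a simple sum can’t capture, the factored shortcut can disagree with the joint search, which is the trade-off any such simplification accepts.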

Song also says that he’s working on a feedback method, so that the robot could indicate to the gesturer whether or not it understood the message. One hopes it will do this by nodding or shaking a motorized camera.

(MIT News via Naval Open Source Intelligence)

- DoD wants double the drones

- Did Iran “hack” a U.S. drone?

- I bet the Texas police wish they could have gestured to their drone

- The X-47B drone may one day be reading your hands

Have a tip we should know? tips@themarysue.com