AI is coming for all of us whether we like it or not. It is a major concern in the WGA and SAG-AFTRA strikes, journalists are pushing back against AI-generated articles, and even established directors like Christopher Nolan are worried about it. At a recent early screening of Oppenheimer, his latest film, which explores the creation of the atomic bomb, Nolan talked about the problems surrounding AI and its rapid growth.

“The rise of companies in the last 15 years bandying words like algorithm—not knowing what they mean in any kind of meaningful, mathematical sense—these guys don’t know what an algorithm is,” Nolan said at the screening. “People in my business talking about it, they just don’t want to take responsibility for whatever that algorithm does.”

To most of us, an algorithm is something opaque and unpredictable. Think about your own social media use. The algorithm behind your feed monitors what you do and what you click on, then curates your feed to match. We often joke that the algorithm "hears" us because things we say out loud suddenly pop up in our feeds without our ever typing them in. All of that is rightfully terrifying on its own, given the state of our world and how quickly AI is changing things. Add in the future of Hollywood and the studios' eagerness to capitalize on this technology in arguably unethical ways, and you can see the trickle-down problem Nolan points out: no one taking accountability.

The future of AI and the horrors it holds

Right now, a lot of people think of AI as something ranging from useful to frivolously entertaining: a tool to make funny artwork or to do their homework. But we are already seeing seriously troubling issues with AI, such as training it on the work of real people and letting it steal from them, and there is potential for real danger as the technology advances. Nolan went on to talk about applying AI not only to Hollywood but, more importantly (and in line with his film), to nuclear weapons.

“Applied to AI, that’s a terrifying possibility. Terrifying,” Nolan said. “Not least because, AI systems will go into defensive infrastructure ultimately. They’ll be in charge of nuclear weapons. To say that that is a separate entity from the person wielding, programming, putting that AI to use, then we’re doomed. It has to be about accountability. We have to hold people accountable for what they do with the tools that they have.”

Nolan is completely right, and that lack of accountability is why AI is so terrifying across the board. We cannot keep letting AI walk all over writers, creatives, and a plethora of other industries while we wait until it is too late. As Nolan points out, if we let it go unchecked, we have no idea what the future holds for everything from art to nuclear weapons. And that should scare us all.

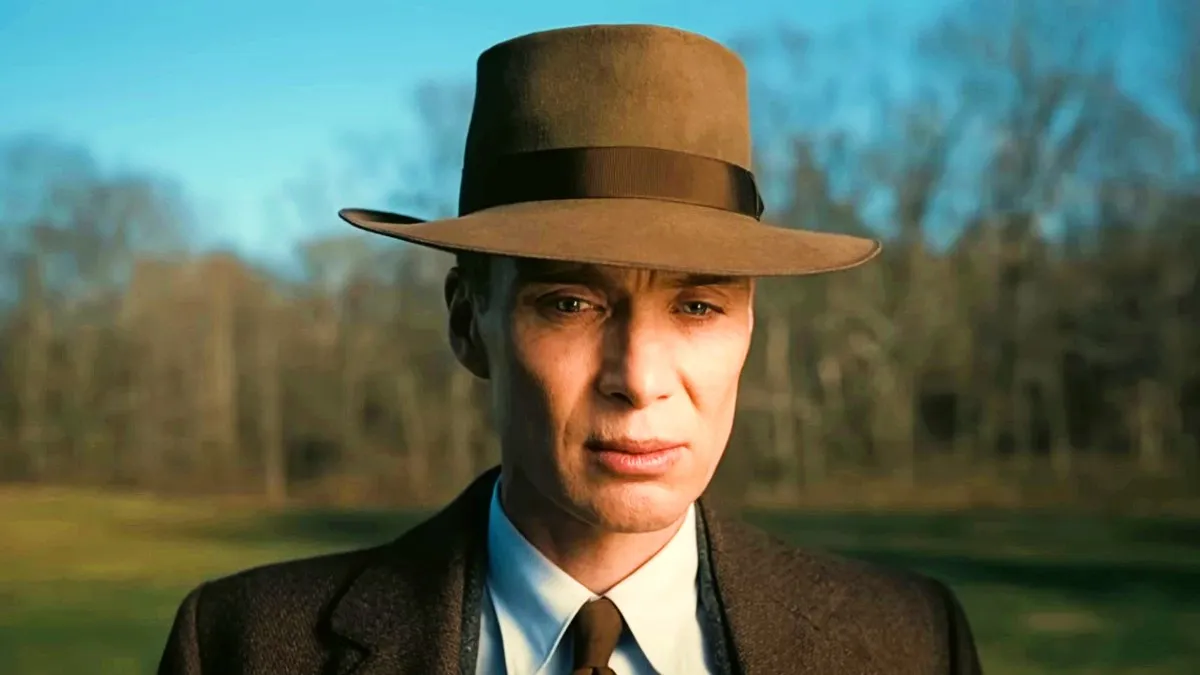

(via Variety, featured image: Universal Pictures)

Published: Jul 17, 2023 05:51 pm