While you surf the web, go about your job, or just generally live your life, scientists are working hard to usher humanity swiftly to its inevitable decline. I realize there are many ways to do this and many fine men and women are on the job, but researchers at Cornell University have been teaching robots how to learn by watching our behavior. That’s right. Robots are studying our every move, and they’re learning.

They’re doing this with algorithms and cameras, and their findings will appear in the International Journal of Robotics Research in a paper called “Learning Human Activities and Object Affordances from RGB-D Videos.”

Oh, sure, the results look harmless enough: A robot observes a human eating a meal, then it clears the table without spilling anything. How precious. The robot watches a human take medicine, so it fetches a glass of water. Helpful! The robot observes a human pour milk into cereal, so without any prompting it returns the milk to the fridge. Perfectly innocuous.

Assistant professor Ashutosh Saxena and graduate student Hema Koppula are obviously fooled by the ruse. Previously, Saxena's team programmed the robots to identify human activities and everyday objects using a Microsoft Kinect, and now they've put it all together so the robot can learn how to respond appropriately to each object.

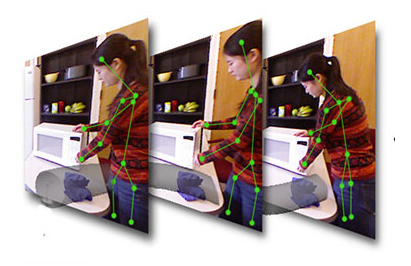

The robot learns by observing how we handle objects. It sees the human body not as a living, breathing, caring individual, but as a constellation of 3D coordinates, joints, and angles, and it tracks the movements of our limbs frame by frame through its unfeeling camera eyes. The mapped sequence is then compared with a database of other recorded activities and objects, and the algorithm adds up the probabilities to find the best match for each step.
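For the curious, here is a toy Python sketch of the "compare with a database and add up the probabilities" idea. To be clear, this is not the model from the Cornell paper; it is a simplified illustration under several assumptions of our own: each step has already been cut into a segment of the same length as the stored templates, each frame is a list of (x, y, z) joint positions, tracking noise follows a Gaussian with a made-up `sigma`, and the names `frame_log_likelihood`, `best_match`, `label_steps`, and the tiny `database` are all hypothetical. Probabilities are added in log space, which is equivalent to multiplying them but avoids numeric underflow on long sequences.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical data layout: a frame is a list of (x, y, z) joint positions in metres,
# and a sequence (one step of an activity) is a list of frames.
Frame = List[Tuple[float, float, float]]
Sequence = List[Frame]


def frame_log_likelihood(observed: Frame, template: Frame, sigma: float = 0.1) -> float:
    """Log-probability of an observed frame under an isotropic 3-D Gaussian
    centred on the template frame (assumed noise model; sigma is made up)."""
    total = 0.0
    for (ox, oy, oz), (tx, ty, tz) in zip(observed, template):
        sq_dist = (ox - tx) ** 2 + (oy - ty) ** 2 + (oz - tz) ** 2
        # Log-density of one joint: -|x - mu|^2 / (2 sigma^2) - (3/2) log(2 pi sigma^2)
        total += -sq_dist / (2 * sigma ** 2) - 1.5 * math.log(2 * math.pi * sigma ** 2)
    return total


def best_match(observed: Sequence, database: Dict[str, Sequence]) -> Tuple[str, float]:
    """Score one observed step against every labelled template and return the
    best label with its score. Assumes equal-length, pre-aligned sequences."""
    scores = {}
    for label, template in database.items():
        # "Adding up the probabilities" frame by frame, in log space.
        scores[label] = sum(
            frame_log_likelihood(o, t) for o, t in zip(observed, template)
        )
    label = max(scores, key=scores.get)
    return label, scores[label]


def label_steps(steps: List[Sequence], database: Dict[str, Sequence]) -> List[str]:
    """Find the best-matching template for each pre-segmented step."""
    return [best_match(step, database)[0] for step in steps]


if __name__ == "__main__":
    # Toy database: one tracked joint (say, the right hand) over three frames.
    database = {
        "reaching": [[(0.0, 1.0, 0.5)], [(0.2, 1.0, 0.6)], [(0.4, 1.0, 0.7)]],
        "lifting": [[(0.0, 1.0, 0.5)], [(0.0, 1.2, 0.5)], [(0.0, 1.4, 0.5)]],
    }
    reach_obs = [[(0.0, 1.0, 0.5)], [(0.19, 1.01, 0.6)], [(0.41, 0.99, 0.7)]]
    lift_obs = [[(0.0, 1.0, 0.5)], [(0.01, 1.2, 0.5)], [(0.0, 1.39, 0.5)]]
    print(label_steps([reach_obs, lift_obs], database))  # ['reaching', 'lifting']
```

A real system would also have to decide where one step ends and the next begins, and would reason about the objects being handled, not just the skeleton; this sketch skips both.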

And so they learn. The robot watches different people perform a given activity, breaks each performance into individual movements such as reaching, lifting, or pouring, and then records what the versions have in common. Meanwhile, it learns how each object should be handled: cups and plates, for instance, are held level to keep from spilling whatever they contain.

Said Saxena:

“It is not about naming the object. It is about the robot trying to figure out what to do with the object. As babies we learn to name things later in life, but first we learn how to use them.”

He added that eventually, a robot would be able to learn how to perform the "entire human activity," which is the most exciting/terrifying part of all this. As assistive robots become a thing, they'll be able to draw on larger shared databases and learn from each other, something that has already started happening.

In conclusion, until they turn on us, we’re going to have immaculate kitchen tables with nary a spilled drop of milk. But be careful of those pills you’re taking. They’re keeping tabs on that.

Published: Mar 22, 2013 06:30 pm