YouTube comments are notoriously disgusting, and it turns out that YouTube doesn’t care for them either, although the company hasn’t done a particularly good job of keeping the place spruced up.

YouTube’s support page has referred to the site’s intention to scrub out “hate speech” for a while now. The video above, which details the website’s reporting system, came out last year, and YouTube’s stance against “hate speech” has been in effect for several years longer than that. Back in 2010, Technology Review detailed YouTube’s guidelines against “hate speech,” which YouTube/Google then described as follows: “Speech which attacks or demeans a group based on race or ethnic origin, religion, disability, gender, age, veteran status, and sexual orientation/gender identity.” (Admittedly, Technology Review’s 2010 article took YouTube and Google to task for not appearing to follow their own guidelines in any way. Whoops.)

YouTube’s current page describing the site’s policy against “hate speech” has begun making the rounds again today, with some people theorizing that the policy has changed, although YouTube’s developer blog makes no reference to any recent changes. I reached out to YouTube for comment and will update this article if I hear back.

As it stands now, YouTube defines “hate speech” as “content that promotes violence or hatred against individuals or groups based on certain attributes, such as: race or ethnic origin, religion, disability, gender, age, veteran status, sexual orientation/gender identity.” The list of attributes is the same as in the 2010 description, but the wording that precedes it has shifted from speech that “attacks or demeans a group” to content that “promotes violence or hatred against individuals or groups.”

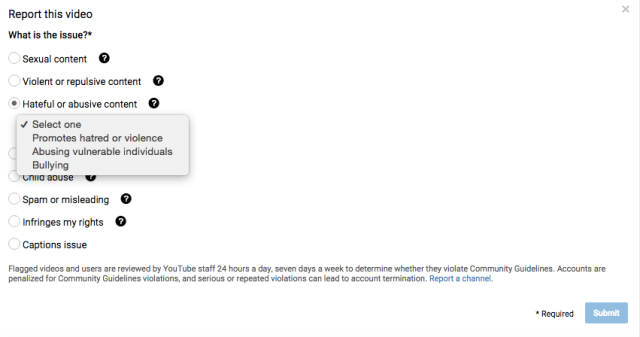

The current system makes it easy to report an individual YouTube user via this form, or to report an individual video via a form that appears underneath it after you click the “More” button, and then “Report.”

Filing a report is simple enough, but where does it go? Last year, Twitter also introduced tools that improved abuse reporting, but reports don’t always succeed, and even when one does get a user suspended, that user can usually create a new account quickly and easily. This is a genuinely tricky problem to solve, and it’s one that Sarah Jeong masterfully deconstructs in her book The Internet of Garbage, which I wish I could send to every website engineer in the world … and everyone else, for that matter.

Even if this “hate speech” page isn’t new, I hope the continued discussion about it will make it clear to YouTube that this is an issue its users care a lot about. Can you imagine how much more pleasant the service would be if we didn’t have to read all of those horrific comments?

So, longtime YouTubers: do these reporting tools feel familiar to you, or do you think they just got an overhaul? Have you ever filed harassment reports on YouTube before? Did it feel like mailing a letter to Santa? (Note: that wouldn’t necessarily be a good thing.)

UPDATE on 12/7/2015 at 4:52 PM: YouTube responded to our request for comment, saying “this is not a new policy” and linking to this tweet from YouTube staffer Chris Dale.

@Spacekatgal. These hate speech policies are not new. They’ve been part of YouTube’s policies for years.

— Chris Dale (@cadale) December 7, 2015

As I wrote in my original article, YouTube has indeed had a policy against “hate speech” for several years, yet few people seem to know about it. I hope YouTube staffers will consider publicizing the reporting tools they’ve built, since many users don’t appear to know those tools exist either, and will use this as an opportunity to highlight their current efforts to combat abuse on the platform.

(via Twitter, image via YouTube)



Published: Dec 7, 2015 04:31 pm